Amazon Picking Challenge

Robotic Perception and Manipulation, Fall 2015 - Summer 2016

I helped build a robotic system that can aid in Amazon's order fulfillment process. The robot placed 6th and 7th in the two parts of the 2016 Amazon Picking Challenge, held in Leipzig, Germany. The first challenge, the picking task, was to pick items off warehouse shelves and place them into an order bin. The second challenge, the stowage task, was to pick items out of a cluttered tote and place them back on a warehouse shelf. For detailed information about our implementation and engineering process, please visit our team's website. I was responsible for leading the perception system design. My additional responsibilities included the mechanical design of a suction-based gripper, coding localization algorithms for identifying the shelf position, and programming grasping algorithms to pick items out of a cluttered bin using surface normals and point cloud segmentation. We designed the system around the UR5 robotic manipulator. The videos below show the UR5 performing both the picking and the stowage tasks. Here is a set of slides outlining the system.

Picking Task

Perception Subsystem

My primary responsibility for the picking task was the perception system, which had two main components: shelf localization and item localization. Item localization consisted of identifying a target item in a bin cluttered with up to 10 items. A Kinect RGB-D sensor was mounted to the wrist of the UR5. Static images and point clouds were collected and analyzed using both geometric methods and convolutional neural networks to determine shelf and item locations. Skeletal code for item identification can be found on my GitHub page.

The shelf localization system used constrained ICP (iterative closest point) algorithms to match point clouds collected from multiple perspectives to a CAD model of the Kiva shelf. A video of our preliminary localization system can be found here on YouTube.
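To illustrate the idea, here is a minimal sketch of a constrained ICP loop, not our competition code. It assumes a simplified planar case: the scan and the shelf model are 2D point lists, the only free parameters are yaw and an in-plane translation, and the constraint is a hypothetical yaw limit (`max_yaw`). The function names are made up for this example, and the constraints we actually used are not shown here.

```python
import math

def centroid(points):
    n = len(points)
    return sum(p[0] for p in points) / n, sum(p[1] for p in points) / n

def transform(points, yaw, tx, ty):
    """Rotate points by yaw about the origin, then translate by (tx, ty)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def fit_yaw(src, dst):
    """Least-squares rotation angle that maps paired points src onto dst."""
    (sx, sy), (dx, dy) = centroid(src), centroid(dst)
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    return math.atan2(cross, dot)

def icp_2d(scan, model, iterations=20, max_yaw=math.radians(15)):
    """Align scan to model; yaw is clamped to +/- max_yaw (assumed constraint)."""
    yaw, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = transform(scan, yaw, tx, ty)
        # Brute-force nearest model point for every transformed scan point.
        matches = [min(model, key=lambda m: (m[0] - p[0]) ** 2 + (m[1] - p[1]) ** 2)
                   for p in moved]
        # Re-fit the full scan-to-model transform, then enforce the yaw limit.
        yaw = max(-max_yaw, min(max_yaw, fit_yaw(scan, matches)))
        (sx, sy), (mx, my) = centroid(scan), centroid(matches)
        c, s = math.cos(yaw), math.sin(yaw)
        tx, ty = mx - (c * sx - s * sy), my - (s * sx + c * sy)
    return yaw, tx, ty
```

Limiting the search to physically plausible poses is one way to keep ICP from locking onto the wrong part of a repetitive structure such as a grid of shelf bins. A real system would work on 3D point clouds and use a spatial index for the nearest-neighbor search.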

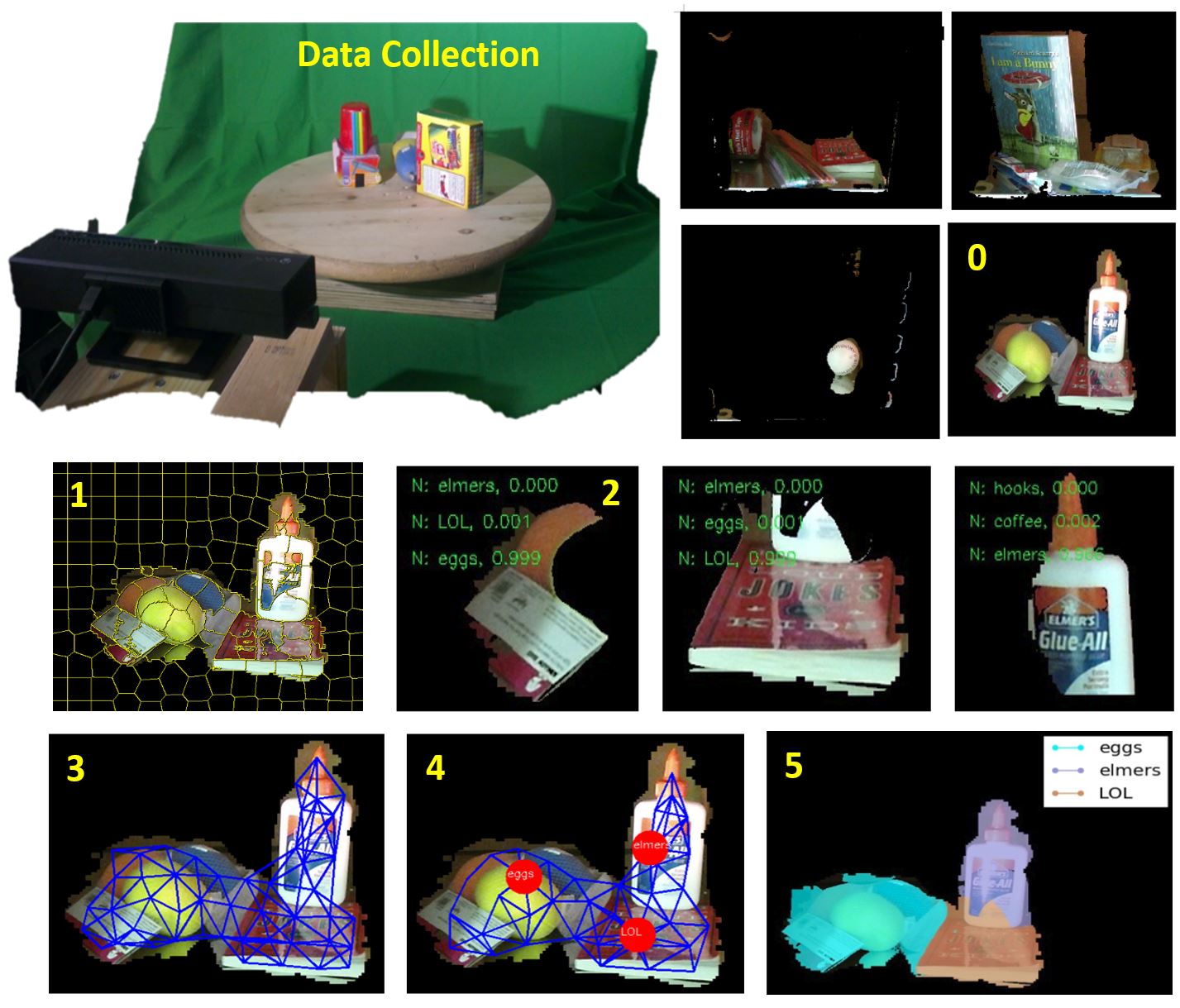

The item identification pipeline consists of several steps, outlined in the image below. First, the items are segmented from the background shelf (step 0): depth infilling fills the holes in the point cloud, and then, using the localized shelf, we remove all points that fall outside the shelf bounds and apply the resulting mask to the RGB image. Next, we use the SLIC clustering algorithm (step 1) to divide the image into superpixels. Each superpixel is then classified (step 2) using a CNN based on the AlexNet architecture, under the assumption that each superpixel contains only a single item. After classification, a graph is created (step 3) connecting adjacent superpixels. Conditional random field techniques are used to determine the maximum likelihood nodes (step 4) for each item in the scene. Predicting every item in the scene, rather than only the target, increases confidence in the target item's label. Finally, each pixel is labeled (step 5) and a mask is applied to the point cloud to determine ideal grasping surfaces.
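Steps 3 and 4 can be sketched roughly as follows. This is an illustrative stand-in rather than our implementation: it builds the adjacency graph from a small grid of superpixel ids, then runs iterated conditional modes (ICM) with a Potts smoothness term, a simple approximate inference method substituted here for the conditional random field optimization. The function names, the `smooth` weight, and the class names in the usage note are all hypothetical.

```python
import math

def adjacency(labels):
    """Build a superpixel adjacency graph from a 2D grid of superpixel ids."""
    edges = {}
    rows, cols = len(labels), len(labels[0])
    for r in range(rows):
        for c in range(cols):
            a = labels[r][c]
            edges.setdefault(a, set())
            # Look at the pixel below and the pixel to the right.
            for rr, cc in ((r + 1, c), (r, c + 1)):
                if rr < rows and cc < cols and labels[rr][cc] != a:
                    b = labels[rr][cc]
                    edges[a].add(b)
                    edges.setdefault(b, set()).add(a)
    return edges

def icm(probs, edges, smooth=1.0, sweeps=10):
    """Approximate labeling: CNN scores plus a penalty for disagreeing neighbors.

    probs maps superpixel id -> {class name: probability from the classifier}.
    """
    assign = {n: max(p, key=p.get) for n, p in probs.items()}
    for _ in range(sweeps):
        changed = False
        for n, p in probs.items():
            def energy(k):
                unary = -math.log(max(p[k], 1e-9))
                pairwise = sum(smooth for m in edges.get(n, ()) if assign[m] != k)
                return unary + pairwise
            best = min(p, key=energy)
            if best != assign[n]:
                assign[n], changed = best, True
        if not changed:
            break
    return assign
```

For example, with three superpixels in a row scored `{"glue": 0.9, "book": 0.1}`, `{"glue": 0.45, "book": 0.55}`, and `{"glue": 0.8, "book": 0.2}`, the weakly classified middle superpixel is relabeled to match its two confident neighbors.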

Data was collected for each item using a turntable and an actuated Kinect. About 200 images were captured of each of the 38 items. SLIC was applied to each of these images, along with scale, color, and rotation distortions, to generate 400,000 training images for the CNN.
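As a rough illustration of the distortion step, here is a minimal sketch that uses plain Python lists as images. It is not our data pipeline: the distortion ranges are assumptions, rotation is limited to multiples of 90 degrees for simplicity, and all function names are made up for the example.

```python
import random

def rotate90(img):
    """Rotate an image (rows of (r, g, b) tuples) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def rescale(img, factor):
    """Nearest-neighbor resize by a scale factor."""
    h, w = len(img), len(img[0])
    nh, nw = max(1, round(h * factor)), max(1, round(w * factor))
    return [[img[min(h - 1, int(r / factor))][min(w - 1, int(c / factor))]
             for c in range(nw)] for r in range(nh)]

def color_jitter(img, gains):
    """Multiply each channel by its gain and clip to the 0-255 range."""
    return [[tuple(min(255, max(0, round(v * g))) for v, g in zip(px, gains))
             for px in row] for row in img]

def augment(img, n, seed=0):
    """Generate n randomly distorted copies of one training crop."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        sample = img
        for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
            sample = rotate90(sample)
        sample = rescale(sample, rng.uniform(0.8, 1.2))  # assumed scale range
        gains = [rng.uniform(0.9, 1.1) for _ in range(3)]  # assumed color range
        samples.append(color_jitter(sample, gains))
    return samples
```

Applying a handful of random distortions to every superpixel crop is how a few thousand captured images can grow into a training set of several hundred thousand.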

We chose this method for several reasons. First, we did not have the manpower to collect our own training images of multi-object scenes, so we needed a method that could be trained on images of isolated items yet still make predictions on cluttered scenes. Second, we needed a method that handled significant occlusions: even when an item is partially occluded, we can still classify the individual superpixels that belong to it. This method worked very well on shelves with 6 or fewer items. On more cluttered shelves, however, it was difficult to tune the optimization step to make accurate overall predictions.

Perception Pipeline -- Data collection, item segmentation (0), SLIC clustering (1), superpixel classification (2), graph generation (3), graph search (4), and cluster by cluster labeling (5)

Stowage Task

My primary responsibilities for the stowage task were the perception system and the grasping system. For now, please see our team website for more details.